Mojo is a new programming language from Modular, designed for AI/ML workloads. This article covers Mojo’s main features and how to use it.

Python is the most widely used programming language for artificial intelligence applications because it is easy to use and well suited to rapid prototyping, and it still ranks first in the TIOBE index. Mojo is designed to become a superset of Python, so anyone who knows Python can pick up Mojo easily, and Mojo code can run much faster than equivalent Python code. It combines Python’s syntax with metaprogramming and systems programming, bridging research and production. Mojo also draws on lessons learned from other languages such as Rust, Swift, Julia, Zig, and Nim.

Mojo already supports many Python features, including:

- async/await

- error handling

- variadics
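For readers who haven’t used these three features, here is what each looks like in plain Python. This is Python, not Mojo, and we don’t claim this exact snippet compiles unchanged in any particular Mojo release; the function names (fetch, gather_all, safe_divide) are our own illustrations. Run it as a standalone script: inside a Jupyter cell, which already has a running event loop, you would await gather_all(...) directly instead of calling asyncio.run.

```python
import asyncio


async def fetch(x):
    # async/await: yield control to the event loop, then return a result
    await asyncio.sleep(0)
    return x * 2


async def gather_all(*values):
    # *values is a variadic parameter: it accepts any number of positional arguments
    return await asyncio.gather(*(fetch(v) for v in values))


def safe_divide(a, b):
    # Error handling: catch the exception instead of letting it propagate
    try:
        return a / b
    except ZeroDivisionError:
        return None


results = asyncio.run(gather_all(1, 2, 3))
print(results)            # [2, 4, 6]
print(safe_divide(1, 0))  # None
```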

We can think of Mojo as Python++. Mojo is still young, so it lacks some Python features, and more are expected in future releases. Even so, Mojo addresses the following problems with Python:

- Poor low-level performance

- The global interpreter lock (GIL) in CPython allows only one thread to execute Python bytecode at a time, which limits multithreaded performance

- Python isn’t suitable for systems programming

- The two-world problem: building hybrid Python libraries backed by C/C++ is complicated (TensorFlow and PyTorch have faced these challenges)

- Mobile and server deployment is difficult

Mojo source files use the extension .mojo or 🔥 (yes, an emoji works as a file extension). For example, a hello world program can be saved as hello.mojo or hello.🔥:

```
$ cat hello.🔥
def main():
    print("hello world")
```

To run the app:

```
$ mojo hello.🔥
```

It will print the hello world message.

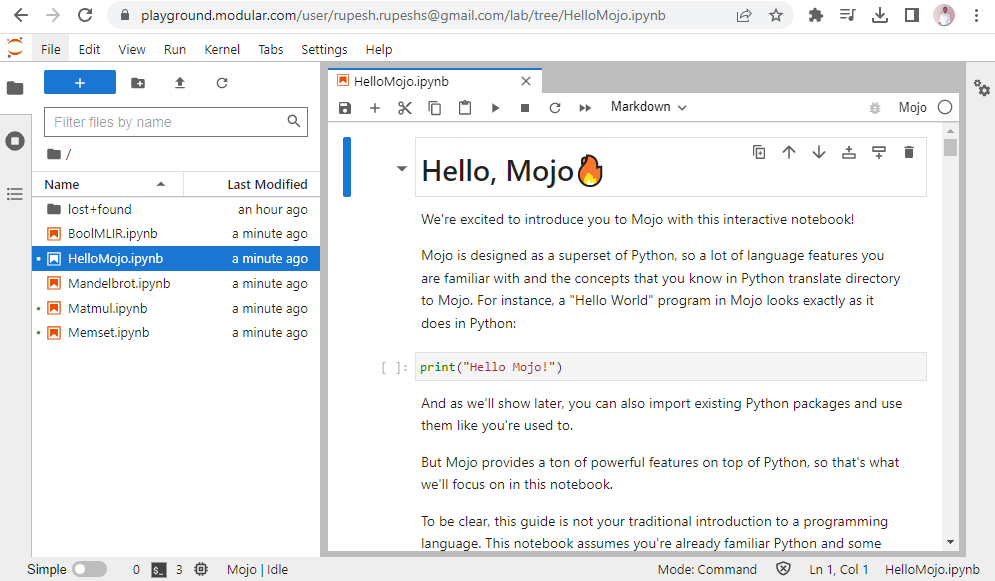

Mojo can also be used in Jupyter notebooks, which are available in the Mojo Playground. You can request access to the Mojo Playground to try out the language.

Matrix Multiplication benchmark

To run a notebook cell as Python code, add %%python at the top of the cell. Here is the baseline matrix multiplication in pure Python:

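```python
%%python
# C, A and B are matrix objects exposing rows/cols and [m, n] indexing.
# The loop accumulates A x B into C, so C should start out as all zeros.
def matmul_python(C, A, B):
    for m in range(C.rows):
        for n in range(C.cols):
            for k in range(A.cols):
                C[m, n] += A[m, k] * B[k, n]
```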
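The baseline above relies on a matrix type with rows/cols attributes and [m, n] indexing, which the article doesn’t show. The sketch below supplies a hypothetical Matrix class (our own, not Modular’s) backed by a flat row-major list, so the naive triple loop can be run and checked on a small input.

```python
class Matrix:
    """Hypothetical minimal row-major matrix with rows/cols and [m, n] indexing."""

    def __init__(self, rows, cols, values=None):
        self.rows = rows
        self.cols = cols
        # Flat row-major storage; defaults to all zeros
        self.data = list(values) if values is not None else [0.0] * (rows * cols)

    def __getitem__(self, idx):
        m, n = idx
        return self.data[m * self.cols + n]

    def __setitem__(self, idx, value):
        m, n = idx
        self.data[m * self.cols + n] = value


def matmul_python(C, A, B):
    # Naive triple loop: accumulate A x B into C one multiply-add at a time
    for m in range(C.rows):
        for n in range(C.cols):
            for k in range(A.cols):
                C[m, n] += A[m, k] * B[k, n]


A = Matrix(2, 2, [1, 2, 3, 4])
B = Matrix(2, 2, [5, 6, 7, 8])
C = Matrix(2, 2)  # zero-initialized because matmul_python accumulates into C
matmul_python(C, A, B)
print(C.data)  # [19.0, 22.0, 43.0, 50.0]
```

Every element costs A.cols interpreted multiply-adds, which is why this loop is the slow reference point for the speedups below.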

Benchmark results on 128x128 matrices, as speedups over the Python baseline:

- Adding type annotations: 1866x speedup over Python

- Vectorizing the innermost loop: 8361x speedup over Python

- Parallelizing Matmul: 14411x speedup over Python

The parallelized version runs roughly 14,400 times faster than the pure Python baseline in this benchmark.
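The article doesn’t show the Mojo code behind the parallel result. One common way to parallelize matrix multiplication is to give each worker a disjoint block of rows of C, so no two workers write to the same output element. The Python sketch below illustrates only that decomposition; the function names and the list-of-lists representation are our own assumptions, not Mojo’s implementation. In standard CPython builds the GIL prevents these threads from running the arithmetic in parallel, so this will not be faster than the serial loop, which is the limitation listed earlier that Mojo’s compiled, multithreaded code avoids.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_rows(C, A, B, row_range):
    # Compute one contiguous block of output rows; blocks never overlap,
    # so workers never write to the same elements of C
    cols, inner = len(C[0]), len(B)
    for m in row_range:
        for n in range(cols):
            acc = 0.0
            for k in range(inner):
                acc += A[m][k] * B[k][n]
            C[m][n] = acc


def matmul_parallel(A, B, workers=4):
    rows, cols = len(A), len(B[0])
    C = [[0.0] * cols for _ in range(rows)]
    # Split the rows of C into roughly equal chunks, one per worker
    chunk = max(1, (rows + workers - 1) // workers)
    ranges = [range(i, min(i + chunk, rows)) for i in range(0, rows, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for every chunk and re-raises any worker exception
        list(pool.map(lambda r: matmul_rows(C, A, B, r), ranges))
    return C


A = [[1, 2], [3, 4], [5, 6]]
B = [[7, 8], [9, 10]]
print(matmul_parallel(A, B, workers=2))  # [[25.0, 28.0], [57.0, 64.0], [89.0, 100.0]]
```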
