In this article, we will explore the concept of an AI PC. We will also discuss OpenVINO and how it can be used to build AI apps for AI PCs.

Let’s start with the concept of an AI PC.

What is an AI PC?

An AI PC (Artificial Intelligence Personal Computer), in simple terms, is a new type of personal computer designed with specific components to efficiently run powerful artificial intelligence (AI) software.

AI PC is a relatively new term in computing. Here is Intel's definition:

An AI PC has a CPU, a GPU and an NPU, each with specific AI acceleration capabilities. An NPU, or neural processing unit, is a specialized accelerator that handles artificial intelligence (AI) and machine learning (ML) tasks right on your PC instead of sending data to be processed in the cloud.

– Intel

Microsoft’s requirements for an AI PC

Microsoft’s definition of an AI PC:

An AI PC should have a CPU, a GPU, and an NPU; it should also support Copilot and have a Copilot key.

– Microsoft

The Copilot key is a new addition to Windows PC keyboards, introduced by Microsoft as part of its AI push for 2024. This key provides quick access to Microsoft’s AI-powered Windows Copilot experience directly from the keyboard.

CPU, NPU, GPU

Let’s understand the core processing components of an AI PC:

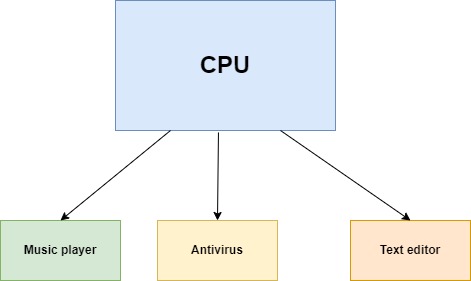

CPU (Central Processing Unit)

We all know that a Central Processing Unit (CPU) is an integrated circuit that handles general-purpose computing. A CPU’s performance depends on factors such as clock speed, core count, cache size, and architecture design; higher clock speeds, more cores, and larger caches generally result in better performance. AI workloads, however, demand large amounts of parallel computation. A CPU can run AI workloads, but it is typically slower at them than a GPU or an NPU.

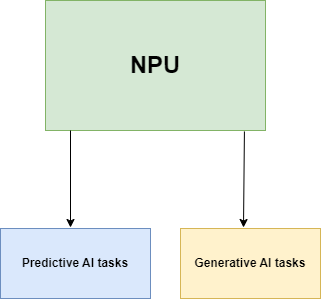

NPU (Neural Processing Unit)

A Neural Processing Unit (NPU) is a specialized hardware accelerator for artificial intelligence (AI) and machine learning (ML) workloads. NPUs are crucial for AI tasks, particularly on-device generative AI, because they are optimized to compute neural networks efficiently. An NPU is especially good at low-power AI calculations. In 2017, Huawei was the first company to integrate an NPU into a smartphone processor.

Advantages of NPU over GPU

Here are some important advantages of NPUs over GPUs:

- NPUs are specifically optimized for neural networks, so they can run them more efficiently than a CPU or GPU.

- NPUs are designed for edge AI and IoT (Internet of Things) AI, where inference runs on the device itself.

- NPUs are energy-efficient (enabling long battery life in devices like smartphones and laptops), so they can be used for continuous AI workloads, for example real-time face recognition.

- NPUs integrate storage and computation through synaptic weights (the weights on the connections between neurons in a neural network), improving operational efficiency compared to CPUs and GPUs. This integration results in higher computational performance and efficiency, making NPUs well suited to small and mobile devices.

- NPUs can complete the processing of a set of neurons with just one or a few instructions, while CPUs and GPUs require thousands of instructions to complete neuron processing.

- GPUs are primarily designed for graphics rendering, whereas NPUs are purpose-built for AI processing.
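Much of the "neuron processing" mentioned in the list above is multiply-accumulate (MAC) arithmetic. As a purely illustrative sketch in plain Python (not how an NPU is actually programmed), one fully connected layer computes each output neuron as a weighted sum of its inputs plus a bias, followed by an activation. Dedicated MAC hardware, such as an NPU's, can execute many of these operations in parallel. The function names `dense_layer` and `relu` are hypothetical, chosen for this example.

```python
from typing import List


def relu(x: float) -> float:
    """Rectified linear unit activation."""
    return max(0.0, x)


def dense_layer(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """Compute one fully connected layer.

    weights[j] holds the synaptic weights feeding output neuron j, so each
    output is relu(sum_i weights[j][i] * inputs[i] + biases[j]).
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # One multiply-accumulate per connection into this neuron
        acc = bias
        for w, x in zip(neuron_weights, inputs):
            acc += w * x
        outputs.append(relu(acc))
    return outputs


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0]
    W = [[0.5, -1.0, 0.25],  # weights into neuron 0
         [1.0, 1.0, 1.0]]    # weights into neuron 1
    b = [0.0, -2.0]
    print(dense_layer(x, W, b))  # [0.0, 4.0]
```

Neuron 0 sums to -0.75, which the activation clamps to 0.0; neuron 1 sums to 4.0. A real network repeats this pattern across millions of weights, which is why hardware built around MAC units pays off.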

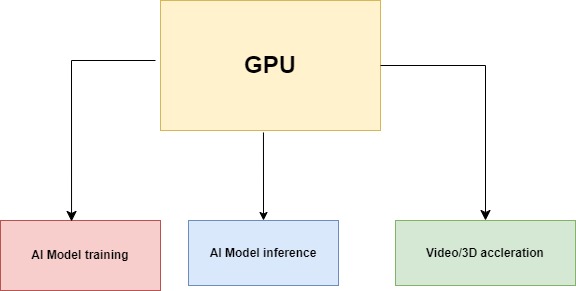

GPU (Graphics Processing Unit)

A Graphics Processing Unit (GPU) is a specialized chip designed to accelerate computer graphics and image processing tasks. It is a programmable processor that is essential for rendering images on a computer screen, particularly 3D animation and video. GPUs are also widely used for AI workloads: their massively parallel architecture makes them powerful and fast enough to train deep neural networks and to run demanding generative AI workloads.
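The CPU, NPU, and GPU trade-offs described above can be summarized as a simple device preference. The sketch below is a hypothetical helper: `pick_device`, the workload labels, and the preference order are illustrative assumptions, not part of any library. It prefers the NPU for sustained low-power inference, the GPU for heavy workloads such as generative AI, and falls back to the CPU. The device names follow the strings OpenVINO reports from `Core().available_devices` (for example `"CPU"`, `"GPU"`, `"NPU"`, or `"GPU.0"` when several GPUs are present); here they are passed in as a plain list so the sketch runs without OpenVINO installed.

```python
from typing import Dict, List, Sequence

# Hypothetical preference order per workload (an assumption for illustration):
# NPU for low-power continuous inference, GPU for heavy generative AI,
# CPU as the fallback that is always present.
PREFERENCES: Dict[str, List[str]] = {
    "low_power": ["NPU", "GPU", "CPU"],
    "heavy": ["GPU", "NPU", "CPU"],
    "general": ["CPU"],
}


def pick_device(available: Sequence[str], workload: str) -> str:
    """Return the first preferred device type present in `available`.

    Names like "GPU.0" count as a GPU because only the part before the
    dot is compared. Unknown workloads fall back to the CPU.
    """
    for wanted in PREFERENCES.get(workload, ["CPU"]):
        for device in available:
            if device.split(".")[0] == wanted:
                return device
    return "CPU"  # assume the CPU can always run the model


if __name__ == "__main__":
    print(pick_device(["CPU", "GPU.0", "NPU"], "heavy"))    # GPU.0
    print(pick_device(["CPU", "GPU", "NPU"], "low_power"))  # NPU
    print(pick_device(["CPU"], "heavy"))                    # CPU
```

In practice, OpenVINO's `AUTO` device can make a device choice for you; a hand-written rule like this one is only useful when you want to pin a workload to a specific accelerator.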

Intel Core Ultra Processors and AI PC

Intel Core Ultra processors are Intel’s mobile processor family introduced in December 2023 with the Meteor Lake architecture. One of their key advantages is support for artificial intelligence (AI) tasks. These processors include a neural processing unit (NPU) that provides low-power acceleration for AI tasks such as real-time language translation, audio transcription, image generation, and AI inference for automation.

If you are curious about the specifications of Intel Core Ultra processors, check here.

Intel Core Ultra processors are well suited to AI PCs because they focus on:

- Intel AI Boost – Built-in AI acceleration with NPU and GPU

- Immersive Graphics – Gaming and video content generation using built-in Intel Arc GPUs

- Longer battery life – The efficient NPU and Arc GPU save battery power

The Intel Core Ultra processor family includes:

- Intel® Core™ Ultra 9 processors

- Intel® Core™ Ultra 7 processors

- Intel® Core™ Ultra 5 processors

Here is Intel’s comparison of these processors.

Use cases of AI PC

AI PCs can handle everyday workloads like playing music or word processing, but they excel in the following use cases:

- Locally running AI assistant

- Local Stable Diffusion (text-to-image generation)

- AI-assisted image editing

- AI-assisted content creation

- Audio effects using AI (for example, OpenVINO AI effects are already available for Audacity)

OpenVINO

OpenVINO (Open Visual Inference and Neural Network Optimization) is an open-source toolkit for optimizing and deploying deep learning models on Intel hardware, including CPUs, GPUs, and NPUs (earlier releases also supported VPUs and FPGAs). Intel first released the OpenVINO toolkit on May 16, 2018. It provides a comprehensive set of tools and libraries that streamline deployment, delivering efficient inference across Intel platforms while maintaining high accuracy.

Using OpenVINO, we can efficiently handle AI workloads on an AI PC. For example, consider the following use cases:

- Generate copyright-free images locally on an AI PC using OpenVINO (FastSD CPU)

- Run a personal assistant locally using OpenVINO’s fast inference

- Chat locally with your documents using OpenVINO RAG (Retrieval-Augmented Generation)

- AI-assisted image, audio, and video editing

How does OpenVINO work?

OpenVINO converts models from various frameworks into its intermediate representation (IR), applying graph transformations along the way. Additional optimizations such as quantization and pruning can be applied with companion tools like NNCF (Neural Network Compression Framework). The optimized model is then loaded by the OpenVINO Runtime (formerly called the Inference Engine), which can automatically select an execution device based on the available hardware when the AUTO device is used. It also supports heterogeneous execution, splitting work across multiple devices such as CPUs, GPUs, and NPUs for better performance.
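To make the quantization step more concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization, one common form of the technique. It is a generic illustration in plain Python, not the algorithm OpenVINO or NNCF actually applies; real toolchains typically add details such as calibration data and per-channel scales. Each weight is mapped to an integer in [-127, 127] using a single scale factor, and dequantizing recovers it to within half a quantization step. The names `quantize_int8` and `dequantize_int8` are hypothetical. In the real toolkit, the surrounding steps correspond to calls such as `openvino.convert_model(...)`, `openvino.save_model(...)`, and `Core().compile_model(model, "AUTO")`.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization (illustrative only).

    Returns integer codes in [-127, 127] and a scale such that
    weight is approximately code * scale.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor; avoid dividing by zero for all-zero input
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the INT8 range
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 1.0]
    q, s = quantize_int8(w)
    print(q)  # [50, -127, 0, 100]
    print(dequantize_int8(q, s))
```

Storing each weight in one byte instead of the four bytes of FP32 is where the memory and bandwidth savings come from; the price is a small, bounded rounding error per weight.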

OpenVINO supports the following frameworks:

- PyTorch

- TensorFlow

- ONNX

- MXNet (supported only by older releases)

- Keras

- PaddlePaddle

Here are some useful links:

- OpenVINO GitHub repository

- OpenVINO Jupyter notebooks

- OpenVINO quick start guide

- Awesome OpenVINO – A curated list of OpenVINO-based AI projects.

- OpenVINO good first issues – If you are interested in contributing to open source, these issues are a good way to start contributing to the OpenVINO repository.

Where to buy an AI PC?

We can buy Intel AI PCs from the following online stores. Check the links below to view the available AI PCs:

Conclusion

We now have a clear idea of what an AI PC is. The combination of powerful AI PCs and Intel’s OpenVINO toolkit unlocks a world of possibilities for computer vision and generative AI applications.