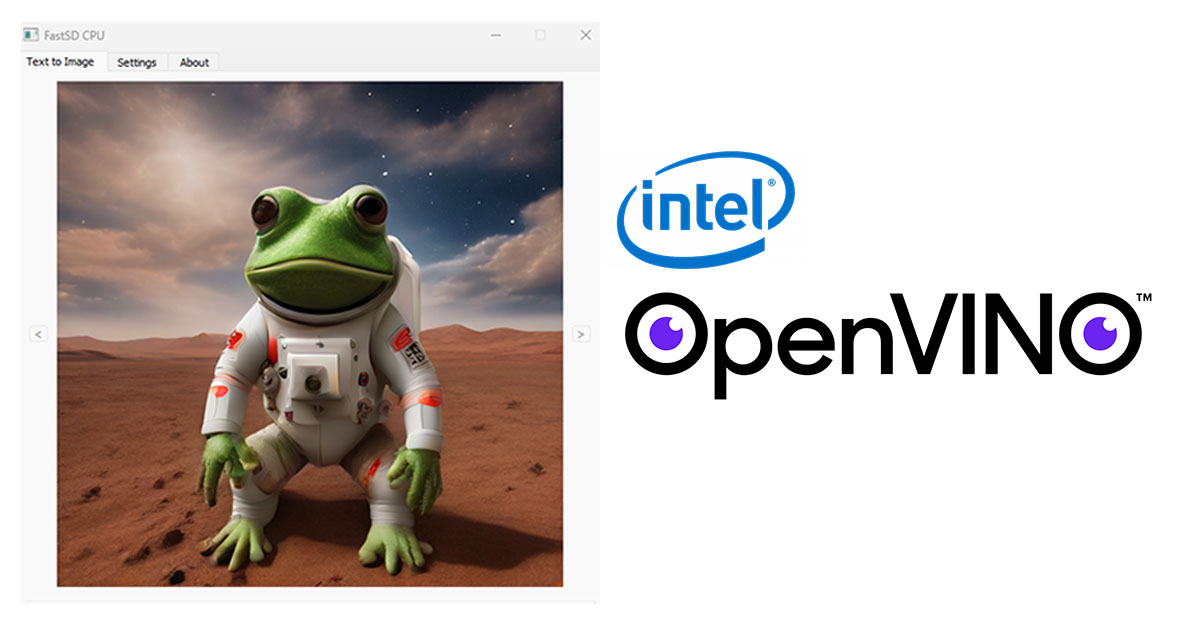

In this article, we will discuss how to run Stable Diffusion quickly on a CPU using FastSD CPU. FastSD CPU is software that generates images from text descriptions, running mainly on the CPU.

Stable Diffusion is an AI model that generates images from text descriptions. It starts with an image of random noise and gradually refines it until it matches the description. Standard Stable Diffusion image generation takes about 50 steps to produce a good-quality image, which makes it a slow, iterative process. Text-to-image generation normally requires a good GPU, but FastSD CPU can generate images without one.

What are Latent Consistency Models (LCM)?

FastSD CPU is based on Latent Consistency Models. A latent consistency model is a type of Stable Diffusion model that can generate images with only 4 inference steps. FastSD CPU leverages the power of LCM models and OpenVINO.

The latent consistency model was first introduced by Simian Luo et al.; see the LCM research paper on arXiv for details.

Fast CPU inference with OpenVINO

OpenVINO stands for Open Visual Inference and Neural Network Optimization. It is a toolkit provided by Intel to optimize and deploy deep learning models on various Intel hardware platforms. OpenVINO improves performance and efficiency by using the acceleration features of hardware such as Intel CPUs, GPUs, FPGAs, and VPUs.

FastSD CPU uses OpenVINO to increase the inference speed of the text-to-image generation process.

LCM model inference speed

The following tests were performed on FastSD CPU running on an Intel Core i7 CPU.

- Image resolution – 512 x 512

- Model – SimianLuo/LCM_Dreamshaper_v7

- Inference steps: 4

| FastSD CPU pipeline | Latency |
| --- | --- |
| PyTorch | 20 seconds |
| OpenVINO | 10 seconds |

We can see a 2x speed boost when using the OpenVINO pipeline.
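The 2x figure follows directly from the two latencies in the table. As a quick check, the arithmetic can be sketched as below; `speedup` is a hypothetical helper written for this illustration and is not part of FastSD CPU.

```python
def speedup(baseline_seconds: float, optimized_seconds: float) -> float:
    """Return how many times faster the optimized run is than the baseline."""
    if baseline_seconds <= 0 or optimized_seconds <= 0:
        raise ValueError("latencies must be positive")
    return baseline_seconds / optimized_seconds


# Latencies reported in the table above (Intel Core i7, 512 x 512, 4 steps).
pytorch_latency = 20.0
openvino_latency = 10.0

print(f"OpenVINO speedup: {speedup(pytorch_latency, openvino_latency):.1f}x")
# prints "OpenVINO speedup: 2.0x"
```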

LCM-LoRA – A universal stable diffusion acceleration module

Latent Consistency Models (LCMs) have been successful in accelerating text-to-image generation, producing high-quality images with minimal inference steps. LCM-LoRA serves as a universal accelerator for image generation tasks, which means we can combine an LCM-LoRA model with existing Stable Diffusion models to generate images in only 4 steps. Three LCM-LoRA models are currently available:

- lcm-lora-sdv1-5 – Compatible with SD 1.5 or any SD 1.5 fine-tuned models

- lcm-lora-sdxl – Compatible with SDXL or any SDXL fine-tuned models

- lcm-lora-ssd-1b – Compatible with SSD-1B or any SSD-1B fine-tuned models

Read the LCM-LoRA arXiv research paper here.

FastSD supports LCM-LoRA models and OpenVINO LCM LoRA fused models.
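Outside FastSD CPU, an LCM-LoRA module can also be attached to a base model with the PyTorch `diffusers` library. The sketch below is one possible way to do it, not FastSD CPU's own implementation. It assumes the three modules are published on Hugging Face under the `latent-consistency` organization; the helper names `pick_lcm_lora` and `build_lcm_lora_pipeline` are hypothetical.

```python
# Hugging Face repo IDs for the three LCM-LoRA modules (assumed to live under
# the "latent-consistency" organization).
LCM_LORA_REPOS = {
    "sd1.5": "latent-consistency/lcm-lora-sdv1-5",
    "sdxl": "latent-consistency/lcm-lora-sdxl",
    "ssd-1b": "latent-consistency/lcm-lora-ssd-1b",
}


def pick_lcm_lora(base_family: str) -> str:
    """Return the LCM-LoRA repo that matches a base model family."""
    try:
        return LCM_LORA_REPOS[base_family.lower()]
    except KeyError:
        raise ValueError(f"no LCM-LoRA known for family {base_family!r}") from None


def build_lcm_lora_pipeline(base_model_id: str, base_family: str):
    """Load a base model and attach the matching LCM-LoRA (PyTorch pipeline)."""
    # Imported lazily so the helpers above work without diffusers installed.
    from diffusers import DiffusionPipeline, LCMScheduler

    pipe = DiffusionPipeline.from_pretrained(base_model_id)
    # LCM-LoRA expects the LCM scheduler in place of the model's default one.
    pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)
    pipe.load_lora_weights(pick_lcm_lora(base_family))
    # Fusing merges the LoRA weights into the base model weights.
    pipe.fuse_lora()
    return pipe
```

A call such as `build_lcm_lora_pipeline("Lykon/dreamshaper-7", "sd1.5")` (the base model ID is only an example of an SD 1.5 fine-tune) would return a pipeline that can then be run with `num_inference_steps=4`.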

How to create LCM-LoRA OpenVINO models?

You can convert LCM-LoRA models to OpenVINO using the lcm-openvino-converter script. Currently, two pre-converted OpenVINO LCM-LoRA models are available:

- LCM-dreamshaper-v7-openvino by Rupesh

- LCM_SoteMix by Disty0

You can use these models directly with FastSD CPU, or run inference with the optimum-intel library using the following code.

Install the libraries:

```shell
pip install optimum-intel openvino diffusers onnx
```

Do the OpenVINO fast inference!

```python
from optimum.intel.openvino.modeling_diffusion import OVStableDiffusionPipeline

# Load the pre-converted OpenVINO LCM model from Hugging Face.
pipeline = OVStableDiffusionPipeline.from_pretrained(
    "rupeshs/LCM-dreamshaper-v7-openvino",
    ov_config={"CACHE_DIR": ""},  # empty string disables the OpenVINO model cache
)

prompt = "Self-portrait,a beautiful cyborg with golden hair, 8k"

# LCM models need only 4 inference steps.
images = pipeline(
    prompt=prompt,
    width=512,
    height=512,
    num_inference_steps=4,
    guidance_scale=1.0,
).images

images[0].save("out_image.png")
```

Adversarial Diffusion Distillation – SD Turbo and SDXL Turbo

FastSD CPU supports SD Turbo and SDXL Turbo models, which can generate high-quality images in only 1 step. These models are based on Adversarial Diffusion Distillation (ADD), which combines three powerful techniques:

- Diffusion models: Slowly refine noise into an image.

- GANs: Force images to be realistic.

- Distillation: Learn from a heavyweight “teacher” model efficiently.

You can read more about Adversarial Diffusion Distillation and SD Turbo models here.

These 1-step Turbo models are intended for research purposes only.

SD Turbo and SDXL Turbo models for OpenVINO

SD Turbo and SDXL Turbo models are available for OpenVINO inference.

- SD Turbo OpenVINO – rupeshs/sd-turbo-openvino

- SDXL Turbo OpenVINO int8 – rupeshs/sdxl-turbo-openvino-int8

ADD model inference speed

The following tests were performed on FastSD CPU running on an Intel Core i7 CPU.

- Image resolution – 512 x 512

- Inference steps: 1

SDXL Turbo

| FastSD CPU pipeline | Latency |
| --- | --- |
| PyTorch | 10 seconds |
| OpenVINO | 5.6 seconds |

SD Turbo

| FastSD CPU pipeline | Latency |
| --- | --- |
| PyTorch | 7.8 seconds |
| OpenVINO | 5 seconds |
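The article does not say how these latencies were measured. One common approach, sketched below as an assumption rather than the method actually used, is to run the pipeline a few times after a warm-up call and take the median wall-clock time. `measure_latency` is a hypothetical helper, and the workload shown is only a stand-in.

```python
import statistics
import time
from typing import Callable


def measure_latency(run_once: Callable[[], object], warmup: int = 1, runs: int = 3) -> float:
    """Return the median wall-clock time in seconds of calling `run_once`."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Warm-up calls absorb one-time costs such as model compilation and caching.
    for _ in range(warmup):
        run_once()
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        run_once()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Stand-in workload; for a real measurement, replace it with a call such as
# lambda: pipeline(prompt=prompt, num_inference_steps=1, guidance_scale=1.0)
latency = measure_latency(lambda: sum(range(100_000)))
print(f"median latency: {latency:.4f} s")
```

Using the median rather than the mean keeps a single slow run, for example one interrupted by another process, from skewing the result.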

Sample inference code using the optimum-intel library:

Install the libraries:

```shell
pip install optimum-intel openvino diffusers onnx
```

```python
from optimum.intel.openvino.modeling_diffusion import OVStableDiffusionPipeline

# Load the pre-converted OpenVINO SD Turbo model from Hugging Face.
pipeline = OVStableDiffusionPipeline.from_pretrained(
    "rupeshs/sd-turbo-openvino",
    ov_config={"CACHE_DIR": ""},  # empty string disables the OpenVINO model cache
)

prompt = "a cat wearing santa claus dress,portrait"

# SD Turbo needs only 1 inference step.
images = pipeline(
    prompt=prompt,
    width=512,
    height=512,
    num_inference_steps=1,
    guidance_scale=1.0,
).images

images[0].save("out_image.png")
```

OpenVINO and TAESD

Inference speed can be increased further by combining OpenVINO with TAESD (Tiny AutoEncoder for Stable Diffusion), which FastSD CPU supports. TAESD allows almost instant decoding of images.

OpenVINO Tiny AutoEncoder models are also available on Hugging Face:

- TAESDXL OpenVINO – rupeshs/taesdxl-openvino

- TAESD OpenVINO – deinferno/taesd-openvino
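For readers using a plain PyTorch `diffusers` pipeline rather than FastSD CPU, the same idea can be sketched by swapping the pipeline's full VAE for a tiny autoencoder. This is an illustration under assumptions, not FastSD CPU's OpenVINO code path: the `madebyollin/...` repo IDs are the PyTorch TAESD checkpoints (separate from the OpenVINO conversions listed above), and `pick_taesd` and `use_tiny_autoencoder` are hypothetical helper names.

```python
# PyTorch Tiny AutoEncoder checkpoints (assumed repo IDs; the OpenVINO
# variants listed above are separate conversions).
TAESD_REPOS = {
    "sd": "madebyollin/taesd",
    "sdxl": "madebyollin/taesdxl",
}


def pick_taesd(base_family: str) -> str:
    """Return the tiny autoencoder repo that matches a base model family."""
    try:
        return TAESD_REPOS[base_family.lower()]
    except KeyError:
        raise ValueError(f"no TAESD known for family {base_family!r}") from None


def use_tiny_autoencoder(pipe, base_family: str):
    """Swap the pipeline's full VAE for the matching tiny autoencoder."""
    # Imported lazily so the helpers above work without diffusers installed.
    from diffusers import AutoencoderTiny

    pipe.vae = AutoencoderTiny.from_pretrained(pick_taesd(base_family))
    return pipe
```

The tiny autoencoder trades some decoding fidelity for speed, which is why it matters most when the denoising stage has already been cut to one step.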

The following tests were performed on FastSD CPU running on an Intel Core i7 CPU.

- Image resolution – 512 x 512

- Inference steps: 1

| Model | Latency |
| --- | --- |
| SD Turbo – OpenVINO | 1.7 seconds |
| SDXL Turbo – OpenVINO | 2.5 seconds |

Using SD Turbo with TAESD, we get about a 12x speed boost over the 20-second PyTorch LCM baseline (20 / 1.7 ≈ 11.8).

FastSD CPU is available for Windows, Linux, and Mac. It provides a Qt-based GUI (default), a web UI, and a CLI.

FastSD near real-time image generation on CPU

FastSD CPU can generate images in near real time (FastSD Experiment) using the script start-realtime.bat. It is based on OpenVINO fast inference. You can read more details here.

FastSD CPU installation

FastSD CPU can be installed by following the steps described here.

Text-to-image generation: 2021 vs 2023

This comparison shows how text-to-image generation has evolved.

Left image (2021) – Created using a high-end Nvidia Tesla T4 GPU (Google Colab); generation was very computationally intensive and slow.

Right image (2023) – Created using FastSD CPU.

Conclusion

In conclusion, we can now generate images from text using only CPU power, thanks to LCM, ADD (SD Turbo and SDXL Turbo), and OpenVINO. FastSD CPU is the easiest way to run Stable Diffusion on the CPU.