Stable Diffusion XL (SDXL) is a generative AI model developed by Stability AI. This tutorial shows how to run Stable Diffusion XL on low-VRAM GPUs (less than 8GB of VRAM).

Stable Diffusion XL is designed to generate high-quality images from shorter prompts. It can generate large (1024×1024) images.

System Requirements

We need to ensure the following:

- System RAM – 12 or 16GB
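The RAM requirement can be checked from a script before installing. The following is a minimal sketch using only the Python standard library. The function names `total_ram_bytes` and `meets_ram_requirement` and the half-gigabyte tolerance are illustrative assumptions, not part of DiffusionMagic.

```python
import ctypes
import os
import sys

MIN_RAM_GB = 12  # lower bound taken from the requirement listed above


def total_ram_bytes() -> int:
    """Return the total physical memory in bytes."""
    if sys.platform == "win32":
        # Windows: query the kernel through GlobalMemoryStatusEx.
        class MEMORYSTATUSEX(ctypes.Structure):
            _fields_ = [
                ("dwLength", ctypes.c_ulong),
                ("dwMemoryLoad", ctypes.c_ulong),
                ("ullTotalPhys", ctypes.c_ulonglong),
                ("ullAvailPhys", ctypes.c_ulonglong),
                ("ullTotalPageFile", ctypes.c_ulonglong),
                ("ullAvailPageFile", ctypes.c_ulonglong),
                ("ullTotalVirtual", ctypes.c_ulonglong),
                ("ullAvailVirtual", ctypes.c_ulonglong),
                ("ullAvailExtendedVirtual", ctypes.c_ulonglong),
            ]

        status = MEMORYSTATUSEX()
        status.dwLength = ctypes.sizeof(MEMORYSTATUSEX)
        if not ctypes.windll.kernel32.GlobalMemoryStatusEx(ctypes.byref(status)):
            raise OSError("GlobalMemoryStatusEx failed")
        return int(status.ullTotalPhys)
    # Linux and similar systems: page size times number of physical pages.
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")


def meets_ram_requirement(total_bytes: int,
                          min_gb: float = MIN_RAM_GB,
                          tolerance_gb: float = 0.5) -> bool:
    """Compare total RAM against the minimum.

    The tolerance is an assumption: a machine sold as "12GB" usually
    reports slightly less because some memory is reserved by hardware.
    """
    total_gb = total_bytes / 1024**3
    return total_gb >= min_gb - tolerance_gb


if __name__ == "__main__":
    ram = total_ram_bytes()
    print(f"Total RAM: {ram / 1024**3:.1f} GB")
    print("Meets requirement:", meets_ram_requirement(ram))
```

This only checks system RAM. GPU VRAM is not covered because the standard library has no portable way to query it.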

Download DiffusionMagic

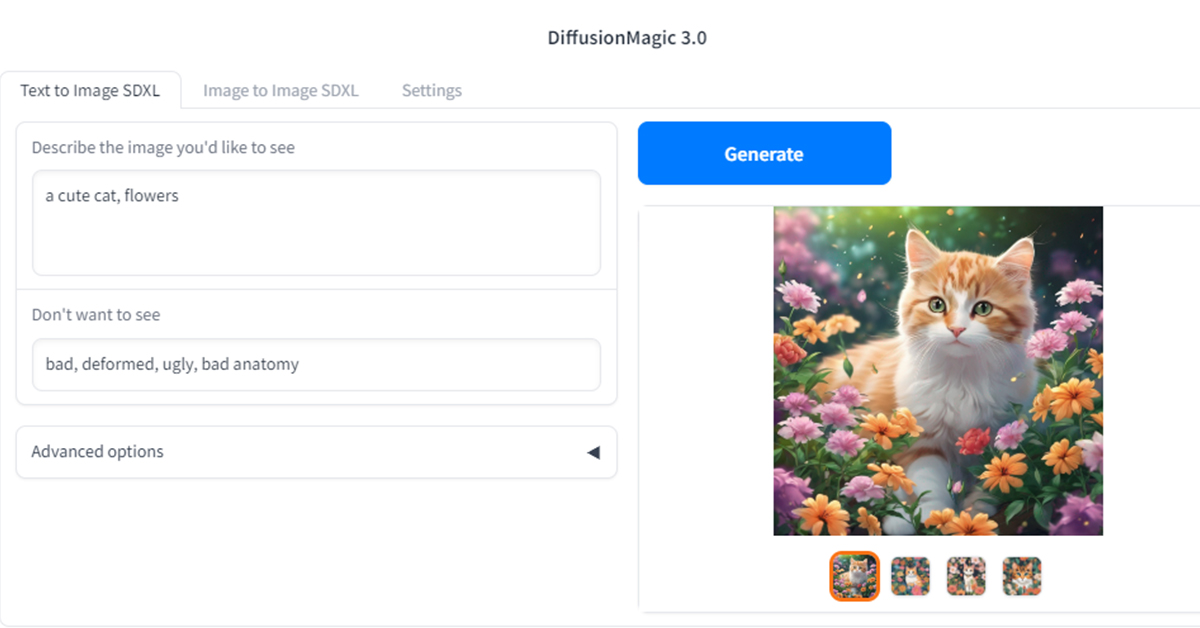

DiffusionMagic is a simple-to-use app for Stable Diffusion workflows, built on diffusers. DiffusionMagic version 3 or higher supports Stable Diffusion XL. DiffusionMagic supports the following workflows:

- Text to image SDXL

- Image to image SDXL

- Image variations SDXL (give it one image and it generates variations of it)

Download the release from itch.io or from GitHub releases.

Install DiffusionMagic

To install DiffusionMagic, run the install.bat file on Windows and wait for the installation to complete. It takes some time to download and install the packages.

Start DiffusionMagic

After the installation completes, start DiffusionMagic:

- Double-click on the start.bat file

- Next, open http://127.0.0.1:7860/ in a browser.

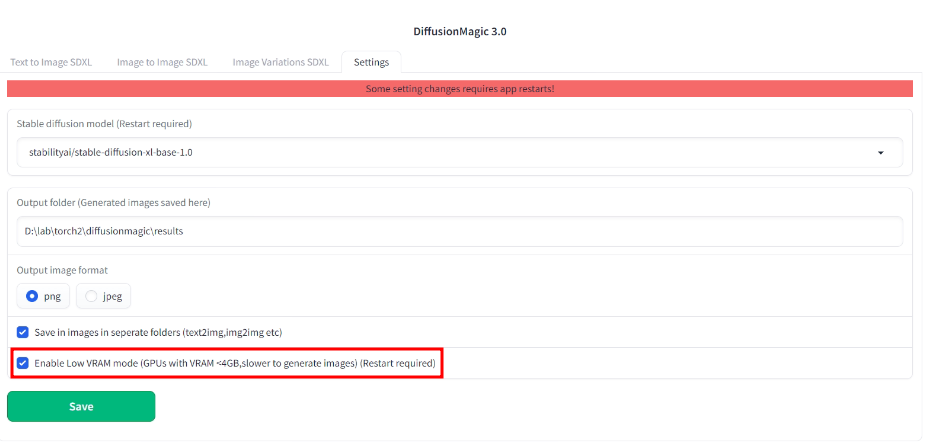

- Then open the settings tab and enable low VRAM mode as shown below.

- Click the save button to save the settings.

- Close the console window.

- Finally, start the app again by double-clicking the start.bat file so the new setting takes effect.
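DiffusionMagic's low VRAM mode is switched on through the UI, and its exact implementation is not described in this tutorial. For readers working with diffusers directly, a comparable setup might look like the sketch below. It is an assumption about which memory-saving options matter, not DiffusionMagic's actual code. The helper names `is_low_vram` and `load_sdxl` are hypothetical, and the model ID `stabilityai/stable-diffusion-xl-base-1.0` is the public SDXL base checkpoint rather than something named in this tutorial. The 8GB cut-off follows the definition of low VRAM used at the top of the article.

```python
LOW_VRAM_LIMIT_GB = 8  # "low VRAM" as defined in this tutorial


def is_low_vram(vram_gb: float) -> bool:
    """Return True when the GPU has less VRAM than the tutorial's threshold."""
    return vram_gb < LOW_VRAM_LIMIT_GB


def load_sdxl(vram_gb: float):
    """Load the SDXL base pipeline, enabling memory savers on small GPUs.

    Illustrative only: requires torch, diffusers, and accelerate, plus a
    CUDA-capable GPU and a model download on first use.
    """
    # Imported lazily so is_low_vram() works without these packages installed.
    import torch
    from diffusers import StableDiffusionXLPipeline

    pipe = StableDiffusionXLPipeline.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0",
        torch_dtype=torch.float16,  # half precision halves weight memory
        variant="fp16",
        use_safetensors=True,
    )

    if is_low_vram(vram_gb):
        # Keep sub-models on the CPU and move each to the GPU only while it runs.
        pipe.enable_model_cpu_offload()
        # Decode the image in slices and tiles to lower peak VAE memory.
        pipe.vae.enable_slicing()
        pipe.vae.enable_tiling()
    else:
        pipe.to("cuda")

    return pipe
```

With this in place, something like `pipe = load_sdxl(vram_gb=6)` followed by `pipe("a lighthouse at sunset").images[0].save("out.png")` would generate and save one image. CPU offloading trades generation speed for lower VRAM use, which is usually the right trade on cards under 8GB.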

Conclusion

There you have it: a simple way to run Stable Diffusion XL on low-VRAM GPUs. See also: Easy Way to Run Stable Diffusion XL on Colab using DiffusionMagic.